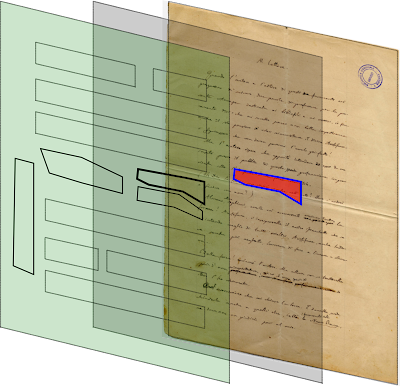

Critical editions, with their notes at the foot of the page (the 'apparatus') recording the variants of the version printed as the 'main' text above, were very popular from the late 18th century onwards, both in print editions of classical authors and in scholarly editions of early printed books. In the digital medium, however, this view, so dependent on print for its effectiveness, has struggled to make an impact, except to provoke the response that it is a fake, a copy of a print edition on screen. The apparatus showed a cross-section of the versions that differ from the printed base text, linked to it by line numbers. Unfortunately this does not work well on screen. Because the text is not divided into pages, the line numbers would grow too large, and in any case the idiom of the screen is based on clicks and highlighting rather than numbering. In prose texts, numbering the lines would not even be possible unless the reader accepted the line breaks (and hyphenation) of a particular print edition. Another problem is that an automatically generated (rather than hand-edited) apparatus is either too detailed to be readable or filtered, so that important small variants may be lost. A more natural way of displaying variation on screen has to be found.

Side-by-side

On screen, this is the most popular view for displaying multiple versions, used for example by the Online Froissart and Juxta Commons. Usually just two versions are shown, and the user can choose which two to compare. Some implementations let the user display more than two versions, but beyond two the view becomes confusing, because only the differences between each pane and one designated base version can be shown. What this view fundamentally lacks is the ability to show all versions (or a chosen subset) at once.

Table view

Another way to display variants is in a table, which aligns all the variants one above the other wherever they correspond. Editors love this view because it resembles the apparatus of a critical edition, but there are only a few examples on the Web, and these usually appear in a window that floats above the text and so obscures it.
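
To make the idea of aligned variants concrete, here is a minimal Python sketch; it is not the project's collation method. It assumes each version is a plain string split into words, and it aligns each version pairwise against a chosen base using the standard library's `difflib.SequenceMatcher`. Dedicated collation tools align all versions jointly and can handle transpositions, which this does not. The names `variant_table` and `_map` are hypothetical. Each column of the result holds the base reading and every version's reading at a place where at least one version differs.

```python
from difflib import SequenceMatcher


def _map(opcodes, pos, leftmost):
    """Map a base-token position to the matching position in another version.

    leftmost=True returns the position before any words inserted at `pos`;
    leftmost=False returns the position after them.
    """
    result = None
    for tag, i1, i2, j1, j2 in opcodes:
        if i1 <= pos <= i2:
            j = j1 + (pos - i1) if tag == "equal" else (j1 if pos == i1 else j2)
            if leftmost:
                return j
            result = j
    return result


def variant_table(base, versions):
    """Align every version against `base` word by word.

    Returns a list of columns (base_reading, {sigil: reading}), one for each
    place where at least one version differs from the base.
    """
    base_tokens = base.split()
    aligned = {}
    ranges = []
    for sigil, text in versions.items():
        tokens = text.split()
        # Pairwise word alignment against the base (an assumption of this sketch)
        opcodes = SequenceMatcher(None, base_tokens, tokens, autojunk=False).get_opcodes()
        aligned[sigil] = (tokens, opcodes)
        ranges += [(i1, i2) for tag, i1, i2, _, _ in opcodes if tag != "equal"]

    # Merge overlapping or touching base ranges so each column is self-contained
    merged = []
    for start, end in sorted(ranges):
        if merged and start <= merged[-1][1]:
            merged[-1][1] = max(merged[-1][1], end)
        else:
            merged.append([start, end])

    # Read off each version's text for every merged base range
    columns = []
    for start, end in merged:
        readings = {}
        for sigil, (tokens, opcodes) in aligned.items():
            j1 = _map(opcodes, start, leftmost=True)
            j2 = _map(opcodes, end, leftmost=False)
            readings[sigil] = " ".join(tokens[j1:j2])
        columns.append((" ".join(base_tokens[start:end]), readings))
    return columns


# Example: three versions of one line, with the text of "A" used as the base
table = variant_table(
    "the quick brown fox",
    {"A": "the quick brown fox", "B": "the slow brown fox", "C": "the quick brown dog"},
)
# table == [("quick", {"A": "quick", "B": "slow", "C": "quick"}),
#           ("fox", {"A": "fox", "B": "fox", "C": "dog"})]
```

Merging touching ranges keeps, for example, an insertion in one version and a replacement in another at the same spot in a single column, at the cost of sometimes grouping neighbouring variants together.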

The 'killer app'

It seems obvious to combine the table view with a view of one chosen version at a time, which acts as the base. It is also fairly obvious that the table should scroll horizontally in sync with the main text. Our project supervisor, a little hyperbolically, called this 'a killer app'. It may be observed, however, that:

- Using table view in this way has never been done before.

- Table view is better suited to the digital medium and the screen than the apparatus is.

- It is easy to use, because the horizontal scrolling of the table matches the vertical scrolling of the main text, and highlighting shows which parts of the table correspond to which parts of the text.

- The table can be collapsed out of sight or replaced by a set of options that change the appearance and size of the table (and the number of versions shown).

- The blend of the two views seems so natural that I would venture to suggest that it could be a digital replacement for the print critical edition.
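
One way the synced scrolling and highlighting could be computed is sketched below in Python. It assumes, hypothetically, that each table column covers a contiguous range of characters in the base text, that the columns together cover the whole text starting at offset 0, and that the text pane can report the character offset of its first visible character. The function `sync_table` and its parameters are illustrative, not the prototype's code. The returned column index tells the interface what to highlight, and the pixel value would be applied as the table's horizontal scroll position.

```python
from bisect import bisect_right
from itertools import accumulate


def sync_table(column_starts, column_widths, text_length, top_offset):
    """Return (column_to_highlight, table_scroll_x) for a vertical scroll position.

    column_starts: sorted character offsets in the base text where each table
        column begins; assumed to start at 0 and partition the whole text.
    column_widths: rendered width of each column in pixels.
    text_length: length of the base text in characters.
    top_offset: character offset of the first visible character in the text pane.
    """
    # Last column whose base-text range starts at or before the visible text
    i = max(bisect_right(column_starts, top_offset) - 1, 0)
    # Left edge of each column is the total width of the columns before it
    lefts = [0, *accumulate(column_widths)]
    end = column_starts[i + 1] if i + 1 < len(column_starts) else text_length
    span = end - column_starts[i]
    # Interpolate within the column so the table moves smoothly, not in jumps
    fraction = (top_offset - column_starts[i]) / span if span > 0 else 0.0
    return i, lefts[i] + min(max(fraction, 0.0), 1.0) * column_widths[i]
```

Interpolating within a column, rather than snapping to column edges, keeps the horizontal motion of the table proportional to the vertical motion of the text.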

Check out the first working prototype. There are still some things to be done, such as:

- Making the sigla (the labels identifying each version) stick to the left edge of the scrolling table instead of scrolling out of view.

- Integrating this prototype into the main test panel so that the options work.

- Adding a popup menu to select a new base version.